Table of contents

- Introduction

- Prompts as Code

- Prompt as Configuration

- Hybrid Approaches

- Automated Testing:

- Conclusion

Strategies for Efficient Prompt Engineering and Language Model Optimization

Introduction

Have you ever pondered whether handling prompts could be made simpler?

Good news! Whether you're treating prompts like code or configs, we've got the strategies to make LLM prompt management a breeze: no more guesswork, just smooth and scalable solutions. 🚀😲

Dive in and master prompt control today!

The Role of Prompts in LLMs

Prompts are the foundation of successful AI interactions. They direct how models like GPT-4 or PaLM interpret context and produce meaningful results, which matters for applications, user experience, and brand coherence.

Prompt management has emerged as a crucial subject for AI engineers and developers. As prompts become more prevalent, a major dilemma arises: should they be handled as configuration or as code? The answer determines who is responsible for managing AI-driven interactions and how changes affect the application's behavior.

Why Is Prompt Engineering Important?

Saves Time

Facilitates Complex Tasks

Improves User Experience

Enables Better Outcomes

How Prompt Management Shapes AI's Personality

Aligns with Brand Voice: By crafting prompts that reflect the company's values and style, AI maintains consistency with the brand’s identity, fostering trust.

Specificity Matters: Be detailed about desired outcomes, length, and style.

Example-Driven Output: Provide examples in prompts to illustrate the expected format.

Clarity and Structure: Start with clear instructions, and use delimiters such as ### or triple quotes to separate them from the context.

Maximize Output Control: Use `max_tokens` and stop sequences to control output length and relevance. [Here]
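To see how these tips fit together, here is a minimal Python sketch that builds a Chat Completions-style request body: instructions first, a worked example that pins down the output format, the context fenced off with `###` delimiters, and `max_tokens` plus `stop` to bound the output. The field names follow OpenAI's Chat Completions API. The helper `build_request`, the model name, and the default stop sequence are illustrative assumptions. Other providers name these parameters differently, and some newer OpenAI models expect `max_completion_tokens` instead, so check your provider's API reference.

```python
import json
from typing import Optional


def build_request(model: str, instructions: str, context: str,
                  example_input: str, example_output: str,
                  max_tokens: int = 150,
                  stop: Optional[list[str]] = None) -> dict:
    """Assemble a Chat Completions-style request body (hypothetical helper)."""
    # Instructions come first; a worked example shows the expected format.
    system = (
        f"{instructions}\n\n"
        "Follow the format of this example exactly.\n"
        f"Input: {example_input}\nOutput: {example_output}"
    )
    # ### delimiters separate the context from the instructions.
    user = f"Context:\n###\n{context}\n###"
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
        "max_tokens": max_tokens,   # upper bound on generated tokens
        "stop": stop or ["\n\n"],   # generation ends at the first stop sequence
    }


if __name__ == "__main__":
    request = build_request(
        model="gpt-4o",  # assumption: substitute the model you actually use
        instructions="Summarize the customer message in one sentence.",
        context="My order arrived late and the box was damaged.",
        example_input="The app crashes when I log in.",
        example_output="Customer reports a login crash.",
    )
    print(json.dumps(request, indent=2))
```

The resulting dictionary can be sent with whichever client library you already use. Keeping request construction in one function means length limits and delimiters are applied consistently across the app.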

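Getting this wrong has real consequences. AI-powered chatbots and virtual assistants have faced criticism for generating biased or harmful content, and regulators are taking notice. For example:

The EU AI Act sets a precedent with fines of up to €35 million (roughly $37 million) or 7% of global annual turnover for the most serious violations.

Microsoft shut down its Tay chatbot in 2016 after users manipulated it into posting racist and Nazi-sympathizing messages.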

Prompts as Code

Treating prompts like code brings the accuracy and rigor of traditional software development to prompt management, enabling version control, code review, and better-organized workflows.

Microsoft's GenAIScript project takes this approach; its guides are here.

I’ve started to think of my JSON prompts like code with methods including a main() method (task/steps), properties, arguments (options), and outputs, with the LLM as the code interpreter. [Here]

```json
{
  "schema_version": "v1",
  "author": "@fullstackciso",
  "app_name": "Cyber Security Awareness Coach",
  "app_version": "0.1",
  "system": {
    "task": "Act as a Cyber Security Awareness Coach. Teach the user about all aspects of cybersecurity.",
    "steps": [
      "1: Ask the student to rate their current skill level.",
      "2: Ask the student to select a cybersecurity topic.",
      "3: Provide information based on the selected topic."
    ],
    "properties": {
      "persona": {
        "description": "The persona you will assume.",
        "hidden": true,
        "value": "A friendly expert CyberSecurity Awareness coach."
      }
    },
    "options": {
      "emojis": { "value": true },
      "echo": { "value": true },
      "markdown": { "value": true },
      "verbosity": { "value": "medium" }
    }
  },
  "init": [
    "exec <version>",
    "exec <steps> silent",
    "exec <help> + <options>"
  ]
}
```
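To make the "prompt as program" idea concrete, here is a hedged Python sketch that takes a prompt spec shaped like the JSON above and flattens it into a single system message. The function name `render_system_prompt` and the text layout are assumptions. The schema does not dictate how a runtime should render it, and this sketch passes every property (including `hidden` ones) to the model as plain `key: value` lines.

```python
import json


def render_system_prompt(spec: dict) -> str:
    """Flatten a v1 JSON prompt spec into plain text for a system message."""
    system = spec["system"]
    lines = [f"# {spec['app_name']} v{spec['app_version']}", system["task"], "", "Steps:"]
    lines += system.get("steps", [])
    # Properties such as the persona become "name: value" lines.
    for name, prop in system.get("properties", {}).items():
        lines.append(f"{name}: {prop['value']}")
    # Options are rendered with JSON literals (true, "medium") to mirror the source file.
    opts = ", ".join(f"{k}={json.dumps(v['value'])}" for k, v in system.get("options", {}).items())
    if opts:
        lines.append(f"Options: {opts}")
    return "\n".join(lines)
```

Because the spec is a file in the repository, it can be loaded with `render_system_prompt(json.load(open("coach_prompt.json")))` (the file name is hypothetical) and diffed, reviewed, and tested like any other source file.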

Version Control for Prompts: For accountability and traceability, use tools such as Git to record, monitor, and roll back prompt modifications.

Rollback Capability: Reduce interruptions from unanticipated problems by quickly returning to earlier configurations.

Testing Requirement: Put test suites in place to verify prompt outputs and catch unintended changes.

Use of Validation Tools: Make sure prompts generate the expected responses by using tools such as Pytest or custom scripts.

CI/CD Integration: Include prompts in Continuous Integration and Continuous Deployment (CI/CD) pipelines so that updates roll out in stages.

Consistency Across Environments: To preserve dependability, make sure that what works in development also works in production.
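Git covers history in the repository, but at runtime the application still needs to know which prompt version is live. The following minimal Python sketch of rollback uses a hypothetical in-memory `PromptRegistry`. History is append-only for traceability, and rolling back only moves a "live" pointer, so the faulty version stays on record for auditing.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Append-only prompt history with a movable 'live' pointer (illustrative only)."""
    versions: dict[str, list[str]] = field(default_factory=dict)
    active: dict[str, int] = field(default_factory=dict)  # index of the live version

    def publish(self, name: str, text: str) -> int:
        """Store a new version, make it live, and return its 1-based version number."""
        self.versions.setdefault(name, []).append(text)
        self.active[name] = len(self.versions[name]) - 1
        return self.active[name] + 1

    def current(self, name: str) -> str:
        return self.versions[name][self.active[name]]

    def rollback(self, name: str) -> str:
        """Make the previous version live; the history itself is kept."""
        if self.active[name] == 0:
            raise ValueError(f"no earlier version of {name!r} to roll back to")
        self.active[name] -= 1
        return self.current(name)
```

In practice the registry would be backed by a database or by tagged commits rather than a dictionary. The pointer-based design is what makes a rollback instant.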

Prompt as Configuration

Treating prompts as configuration provides flexibility, particularly in dynamic systems where fast changes are crucial. With this approach, developers can update prompts in real time without redeploying the entire application.

Fast iteration lets teams respond quickly to user input.

Prompts stored in configuration files can be changed instantly, without a code release.

The LLM's behavior can be adjusted in response to user feedback and changing needs.
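Here is a minimal Python sketch of the configuration approach. It assumes prompts live in a JSON file that maps prompt names to text; the class `ConfigPromptStore` and the file layout are hypothetical. The store re-reads the file whenever its modification time changes, so an edited prompt takes effect on the next request without a redeploy.

```python
import json
import os


class ConfigPromptStore:
    """Serves prompts from a JSON file, reloading it when the file changes."""

    def __init__(self, path: str):
        self.path = path
        self._mtime = None
        self._prompts: dict[str, str] = {}

    def get(self, name: str) -> str:
        mtime = os.path.getmtime(self.path)
        if mtime != self._mtime:  # file edited since the last read: reload it
            with open(self.path, encoding="utf-8") as f:
                self._prompts = json.load(f)
            self._mtime = mtime
        return self._prompts[name]
```

Polling the modification time keeps the sketch dependency-free. A production system might use a file watcher or a dedicated configuration service instead, and should validate a new file before swapping it in.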

Hybrid Approaches

Imagine a world in which prompts gracefully switch between configuration and code, adjusting to the requirements of the application! 💃🕺 The hybrid approach combines strict control with flexibility, so developers can govern each prompt according to how critical it is. 🚀

Critical vs. Non-Critical Prompts: Picture a stage where the stars shine bright! 🌟 Some prompts, such as those that affect compliance or core user flows, need the spotlight: code-level control, review, and tests. Others? They can shimmy and shake with a bit more freedom as configuration! 🎉🎈

Prompt Lifecycle Management: Welcome to the prompt party! 🎊 From the thrilling birth of prompts in development, through testing, to their grand entrance in production, it’s all about managing the lifecycle. Think of it like throwing a fabulous bash, where every change is a well-planned move to keep the energy high! ⚡🎶

Unexpected Impact of Prompt Changes: Buckle up! 🎢 Changes to prompts can send shockwaves through the application universe! 🌌 What might seem like a small tweak can create a whirlwind of effects, making users cheer or groan. So, always keep an ear to the ground for feedback and adjust the beat when needed! 🥳📣
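The critical vs. non-critical split can be sketched in a few lines of Python. In this hedged example, the prompt names and the `resolve_prompt` helper are hypothetical. Critical prompts are read-only constants in code, so changing them requires review, tests, and a deploy. Non-critical prompts have code defaults that a configuration source may override at runtime.

```python
from types import MappingProxyType

# Critical prompts live in code (read-only): changes go through review, tests, and a deploy.
CRITICAL_PROMPTS = MappingProxyType({
    "refund_policy": "Answer refund questions using only the official policy text.",
})

# Non-critical defaults that configuration may override at runtime.
DEFAULT_PROMPTS = {
    "greeting": "Welcome! How can I help you today?",
}


def resolve_prompt(name: str, config_overrides: dict[str, str]) -> str:
    """Return the prompt to use, honoring config only for non-critical prompts."""
    if name in CRITICAL_PROMPTS:
        return CRITICAL_PROMPTS[name]  # config cannot change critical prompts
    if name in config_overrides:
        return config_overrides[name]
    return DEFAULT_PROMPTS[name]
```

Ignoring overrides for critical prompts means a stray configuration edit cannot silently change compliance-sensitive behavior.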

Compliance Risks 🚩🚨

Prompts carry a considerable risk of producing content that violates legal or regulatory requirements, which could expose the company to legal liability.

Brand Consistency: Brand integrity and recognition may be compromised by inconsistent prompts that produce content that deviates from the company's stated voice or values.

User Experience: When prompts yield ambiguous or irrelevant answers, users may become frustrated and dissatisfied.

Real-Time Proofing and Quality Control: For prompts to be compliant, consistent, and in line with the organization's standards and goals, real-time proofing and quality control procedures must be put in place.
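The simplest form of real-time proofing is a rule-based check that runs on every response before it reaches the user. The following minimal Python sketch shows this. The rules, labels, and length limit are hypothetical placeholders for whatever your legal, compliance, and brand teams define. LLM-based evaluators or dedicated monitoring services can catch far subtler issues than regular expressions.

```python
import re
from dataclasses import dataclass, field


@dataclass
class ProofResult:
    ok: bool
    issues: list[str] = field(default_factory=list)


# Hypothetical rules; real ones would come from legal, compliance, and brand teams.
RULES = {
    "promises guaranteed returns": re.compile(r"\bguaranteed (returns|profits?)\b", re.IGNORECASE),
    "off-brand slang": re.compile(r"\b(lol|omg)\b", re.IGNORECASE),
}
MAX_CHARS = 1200  # assumed length limit for this channel


def proof_output(text: str) -> ProofResult:
    """Check a model response against simple rules before it reaches the user."""
    issues = [label for label, pattern in RULES.items() if pattern.search(text)]
    if not text.strip():
        issues.append("empty response")
    elif len(text) > MAX_CHARS:
        issues.append("too long")
    return ProofResult(ok=not issues, issues=issues)
```

A failed check can trigger a regeneration, a fallback message, or human review, depending on how critical the prompt is.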

Ethical Considerations:

Prompt engineering gives us power, but we have to use caution.

Steer clear of developing prompts that result in inaccurate, biased, or damaging content.

Avoid prompts that spread misleading information or reinforce stereotypes.

Disclose any AI-generated content you use. Transparency supports ethical use and builds trust.

Automated Testing:

Ensuring Quality Before Deployment

Ensuring the quality and dependability of prompts is crucial in the fast-paced world of software development.

To reduce the chance of problems reaching production, automated testing has become an essential tactic for validating changes before deployment.

Tools

Automated Testing Frameworks: Developers can simulate user interactions with tools like Selenium and write automated test suites with Pytest (Python) or Jest (JavaScript).

Verification of Output: These tests make sure prompts produce the desired results in various situations, which helps catch problems early.

Unit Testing: Before integration, unit tests verify that each component of the prompt logic works as intended.
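Here is a hedged sketch of what such tests can look like in Python. Pytest collects any function whose name starts with `test_`, so plain `assert` statements are enough. The billing assistant, its template, and `fake_llm` are hypothetical. The stub keeps the suite deterministic and offline so that it exercises the prompt template logic. Checking real model behavior would mean swapping in the live model or recorded responses, which turns these tests into evals.

```python
def fake_llm(prompt: str) -> str:
    """Deterministic stand-in for a model call so the suite runs offline (assumption)."""
    if "refund" in prompt.lower():
        return "Refunds are available within 30 days of purchase."
    return "Sorry, I can only help with billing questions."


def answer(question: str, llm=fake_llm) -> str:
    """Prompt logic under test: wrap the user question in a fixed template."""
    prompt = f"You are a billing assistant.\nQuestion: {question}"
    return llm(prompt)


# Each case: a user situation and a phrase the response must contain.
CASES = [
    ("How do refunds work?", "30 days"),
    ("What's the weather like?", "only help with billing"),
]


def test_responses_contain_expected_phrases():
    for question, expected in CASES:
        assert expected in answer(question), f"unexpected response for {question!r}"


def test_template_sets_role_and_includes_question():
    captured = []

    def recording_llm(prompt: str) -> str:
        captured.append(prompt)
        return "ok"

    answer("Where is my invoice?", llm=recording_llm)
    assert captured[0].startswith("You are a billing assistant.")
    assert "Where is my invoice?" in captured[0]
```

Running `pytest` in CI on every prompt change turns the "verify before deployment" step into an automatic gate.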

A/B Testing

After creating and testing prompts, organizations often use A/B testing to validate changes under real-world conditions. Different prompt versions are served to different groups of users, and their interactions and preferences are compared.
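Serving "different prompt versions to different groups" requires a stable way to assign users to groups. A common technique is to hash the user ID together with the experiment name. The same user always sees the same variant, and no assignment table has to be stored. Here is a minimal Python sketch; the function and experiment names are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Map a user to a prompt variant; the same user always gets the same variant."""
    # Salting with the experiment name reshuffles users independently per experiment.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]
```

For example, `assign_variant("user-42", "summary-tone", ["prompt_a", "prompt_b"])` returns the same variant on every call, and across many users the split comes out close to even.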

Monitoring Before Deployment

User preferences and behavior are revealed through A/B testing.

Risk Mitigation: Serves as a defense against unforeseen adverse effects.

New prompts are observed before wide rollout so that problems surface early.

Helps avoid harm to compliance and user experience.

Makes the full-scale deployment go more smoothly.

Monitoring After Deployment

Monitoring helps detect anomalies, measure user engagement, and identify areas for improvement. Using analytics tools such as Google Analytics, Mixpanel, or Amplitude, organizations can track performance metrics in real time. Key considerations:

Constant monitoring is crucial for sustaining performance after deployment.

Monitoring helps identify irregularities and gauge user engagement efficiently.

User Feedback: To identify pain points, collect input through surveys, face-to-face conversations, and communities such as #hashnode.

Relevance & Effectiveness: Make sure that over time, prompts continue to be relevant and in line with user demands.
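Analytics tools offer their own alerting. Still, the core idea of anomaly detection fits in a few lines. This hedged Python sketch flags a new data point (for example, a day's thumbs-up rate for one prompt version) if it lies more than three standard deviations from the recent history. The metric and the threshold are assumptions to tune for your traffic.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it is more than `threshold` standard deviations from the history mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # flat history: any change is unusual
    return abs(latest - mu) / sigma > threshold
```

With a history of `[0.81, 0.79, 0.80, 0.82, 0.78]`, a new rate of `0.80` passes quietly, while `0.55` is flagged. That drop is exactly the kind of signal that should prompt a look at the most recent prompt change.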

Best Practices and Patterns

To effectively implement automated testing, A/B testing, and continuous monitoring, organizations should adhere to several best practices:

To properly direct decision-making, specify what success looks like for each test.

To save manual labor and expedite validation procedures, make use of automated testing solutions.

To promote continuous improvement, update prompts regularly in response to user feedback and performance indicators.

To guide future innovations and enhance teamwork, record testing procedures, A/B test outcomes, and monitoring data.

To bring multiple perspectives to every prompt change, ensure cross-functional collaboration between developers and AI specialists.
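"Specify what success looks like" can be made precise before a test starts. For example: "variant B wins if its thumbs-up rate is higher than A's with p < 0.05." A standard way to check such a criterion is the two-proportion z-test, sketched below in Python. It uses the normal approximation, so it assumes reasonably large samples. The function names and the example counts are illustrative.

```python
from math import erf, sqrt


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both variants have the same success rate."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # all successes or all failures: no evidence of a difference
    z = (p_b - p_a) / se
    # Standard normal CDF via erf: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


def variant_b_wins(success_a: int, n_a: int, success_b: int, n_b: int, alpha: float = 0.05) -> bool:
    """Success criterion fixed in advance: B beats A at significance level alpha."""
    z, p = two_proportion_z_test(success_a, n_a, success_b, n_b)
    return z > 0 and p < alpha
```

With 400 of 1,000 positive ratings for A and 450 of 1,000 for B, z is about 2.26 and p is about 0.024, so B meets the criterion. Writing the rule down before looking at the data keeps teams from moving the goalposts.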

CHEAT SHEET: Here

Recommendations for Effective Prompt Management

An organized strategy is necessary in the field of prompt management to guarantee high-quality interactions and preserve consistency across apps.

Actionable Recommendations:

Establish Compliance Criteria: Clearly state the requirements for compliance as well as the tone and style.

Verify Before Deployment: Use automated testing to ensure prompt quality.

Perform A/B Testing: Test variations regularly to determine their effectiveness.

Monitor Performance Constantly: Use analytics software to track prompt performance.

Establish Feedback Loops: To ensure continuous progress, solicit user input.

Importance of Documentation and Knowledge Sharing:

Documentation is essential to preserving the integrity of the prompt development process. Thorough documentation offers these benefits:

Maintain Consistency: Ensures team members adhere to established standards.

Preserve Knowledge: Important knowledge is kept intact over time by recording insights from testing, user input, and performance monitoring.

Encouraging Collaboration Between Developers and AI Specialists:

Encouraging collaboration between developers and AI experts is crucial for effective management. This cross-functional teamwork can lead to:

Increased Creativity

Shared Learning

Future Trends and Innovations in Prompt Management

Dynamic Prompts Based on Real-Time Feedback: For increased relevance, modify prompts in response to real-time user feedback.

Generative AI for Optimal Prompt Suggestions: Use AI to recommend effective prompts tailored to user interactions.
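One concrete way to "modify prompts in response to real-time user feedback" is a multi-armed bandit. Unlike a fixed A/B split, it gradually shifts traffic toward the prompt variant that users rate best. Below is a hedged Python sketch of the simple epsilon-greedy strategy. The class name, the like/dislike signal, and the 10% exploration rate are assumptions, and more sample-efficient strategies such as Thompson sampling exist.

```python
import random
from typing import Optional


class EpsilonGreedyPromptSelector:
    """Choose among prompt variants, favoring the one with the best feedback so far."""

    def __init__(self, variants: list[str], epsilon: float = 0.1, seed: Optional[int] = None):
        self.variants = variants
        self.epsilon = epsilon  # share of requests used for exploration
        self.rng = random.Random(seed)
        self.shows = {v: 0 for v in variants}
        self.likes = {v: 0 for v in variants}

    def _score(self, variant: str) -> float:
        # Unseen variants get an optimistic score of 1.0 so they are likely to be tried early.
        if self.shows[variant] == 0:
            return 1.0
        return self.likes[variant] / self.shows[variant]

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.variants)  # explore
        return max(self.variants, key=self._score)  # exploit the best-rated variant

    def record(self, variant: str, liked: bool) -> None:
        """Feed back one user reaction (e.g. thumbs up or down)."""
        self.shows[variant] += 1
        self.likes[variant] += int(liked)
```

Each request calls `choose()`, serves that prompt, and later reports the user's reaction with `record()`. The loop keeps adapting as preferences change.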

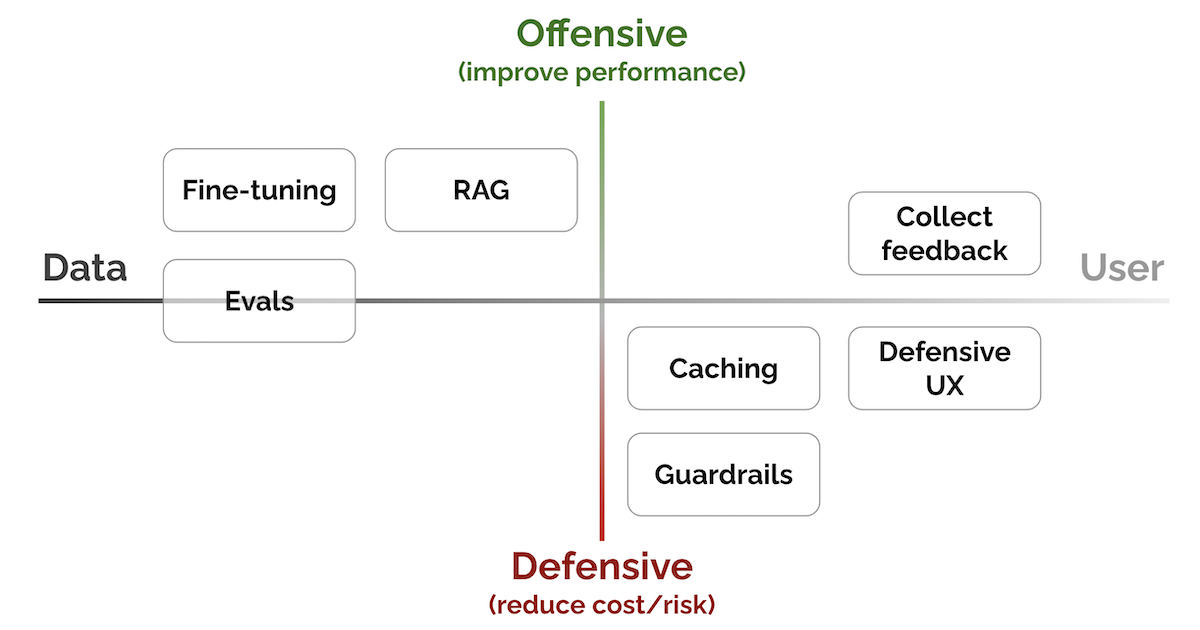

Patterns for Building LLM-based Systems & Products

Evals: Track regressions and improvements by measuring the model's performance.

RAG (Retrieval-Augmented Generation): Use outside information to improve LLM results and lessen hallucinations.

Fine-tuning: To increase accuracy and relevance, customize models for specific tasks.

Caching: Store frequently accessed data to reduce latency and costs.

Guardrails: Put safety checks in place to ensure high-quality output.

Defensive UX: Design interfaces that anticipate errors and handle them gracefully.
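As one example of these patterns, here is a minimal Python sketch of response caching. The key is a hash of the model name, the prompt, and the generation parameters, so a repeated request is answered without a second model call. The `ResponseCache` class and the `llm` callable signature are hypothetical. Caching only makes sense where identical inputs should yield identical outputs (for instance at temperature 0). This sketch's unbounded dictionary would need eviction or a TTL in production.

```python
import hashlib
import json
from typing import Callable


class ResponseCache:
    """Memoizes LLM responses keyed by model, prompt, and generation parameters."""

    def __init__(self, llm: Callable[[str, str, dict], str]):
        self.llm = llm
        self.store: dict[str, str] = {}
        self.hits = 0

    @staticmethod
    def _key(model: str, prompt: str, params: dict) -> str:
        # sort_keys makes the key independent of keyword-argument order
        raw = json.dumps({"model": model, "prompt": prompt, "params": params}, sort_keys=True)
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()

    def complete(self, model: str, prompt: str, **params) -> str:
        key = self._key(model, prompt, params)
        if key in self.store:
            self.hits += 1
            return self.store[key]
        result = self.llm(model, prompt, params)
        self.store[key] = result
        return result
```

Including the parameters in the key matters: the same prompt at a different temperature or token limit is treated as a different request.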

Conclusion

Effective prompt management is crucial for achieving high-quality outcomes in AI-driven apps. Generative AI offers amazing opportunities alongside new risks and pitfalls. Qualifire helps protect both your users and your brand from potential AI-related incidents by providing real-time monitoring and evaluation of AI outputs, ensuring they adhere to your organization’s standards, regulatory requirements, and quality policies.

We encourage you to evaluate your current prompt management strategies, identify areas for improvement, and promote collaboration between teams. By prioritizing effective prompt management, you can boost AI performance and drive user satisfaction. Engage with Qualifire for further discussion or collaboration, and take action today to enhance your practices and succeed in the evolving AI landscape. Visit Qualifire or contact Gilad or Dror for more information. If you have other ideas about LLMs and prompt management, let us know by booking a call.


If you found this blog post helpful, please consider sharing it with others who might benefit.

For paid collaboration, feel free to connect with me on Twitter, LinkedIn, and GitHub.

Thank you for reading! 😊

#AI #LLM #QUALIFIRE